Algorithms - Lesson 5: Parallel and Distributed Algorithms

Overview

In this lesson students explore the benefits and limitations of parallel and distributed computing. First they discuss the way human problem solving changes when additional people lend a hand. Then they run a series of demonstrations that show how simple tasks like sorting cards get faster when more people help, but there is a limitation to the efficiency gains. Later in the lesson students watch a video about distributed computing that highlights the ways distributed computing can help tackle new kinds of problems. To conclude the lesson students record new vocabulary in their journals and discuss any open questions.

Goals

Students will be able to:

- Explain the difference between sequential, parallel, and distributed computing

- Calculate the speedup of a parallel solution to a problem.

- Describe the benefits and challenges of parallel and distributed computing.

Preparation

Collect the manipulatives you will use for the main activity. While decks of cards are suggested, other manipulatives are possible. See the teaching tip in the main activity for suggestions.

Links

Purpose

This lesson is a quick tour of the challenges and benefits of parallel and distributed computing. It caps off the unit to showcase ways these techniques are being used to push the boundaries of how efficiently computer can solve problems.

Getting Started (5 minutes)

Prompt: Brainstorm a task that you can complete faster if you get other people to help. Whats' the most number of people you'd want to help you and why?

Discussion Goal

This should be a quick prompt to foreshadow the main ideas of the lesson. Students should brainstorm many potential tasks. When they start mentioning the maximum number of people they'd want to help them direct attention towards why that's the case. You might hear that:

- Adding extra people makes it more complicated

- Sometimes extra people doesn't really speed things up

- If you're working with lots of people then if one person is slower the whole group is slowed down

Have students think about their answers on their own, then share with a partner, and then finally discuss responses with the entire room.

Remarks

- As we've explored in this unit, computer scientists are always looking for more efficient ways to run programs. One way to do this is to develop faster algorithms that run on a single computer. Another idea we'll explore today, is figuring out ways to run programs on many computers at the same time. We just talked about some benefits and challenges when many people help with a task. As we'll see, the same is true with running programs on multiple computers. It can lead to some improvements, but also some new challenges.

Card Sorting Challenge

Activity (30 minutes)

Set Up: Place students in groups of three or four.

Distribute: Give one member of each group some manipulatives (e.g. a deck of cards)

Teaching Tip

Choosing Manipulatives: This activity can easily be done with many different types of manipulatives, not just cards. For example, students could sort pennies by even / odd year, sort coins into piles of different denominations, sort blocks by color / size, or sort any other readily available item. Pick whatever makes the most sense for your context.

Challenge 1 - One Person Sort: At the front of the room display the directions for the first sort as well as the clock. Run the clock, and have one student in each group sort the manipulatives with one type on top and one type on the bottom. Have students record their time. Then let the another partner repeat the process.

- Shuffle the cards.

- Put them in a neat stack, face down.

- As quickly as you can, get the cards sorted so all the manipulatives of one type are on the bottom and all of the other are on top.

- Time stops when you have the manipulatives sorted and back in a neat stack.

Record the best time in your group

Challenge 2 - Two Person Sort: At the front of the room display the directions for the second challenge. Run the clock, and two students in each group work together to sort the cards with red at the bottom and black at the top. Have students record their time. If students are in groups of four offer to let the other two students try the challenge.

- Shuffle the cards.

- Put them in a neat stack, face down.

- As quickly as you can, get the cards sorted so all the manipulatives of one type are on the bottom and all of the other are on top.

- Time stops when you have the manipulatives sorted and back in a neat stack.

This time two people can sort the manipulatives.

Record the best time in your group

Challenge 3 - Full Group Sort: At the front of the room display the directions for the challenge. Run the clock, and have students in the full group (of at least three students) work together to sort the cards with red at the bottom and black at the top. Have students record their time.

- Shuffle the cards.

- Put them in a neat stack, face down.

- As quickly as you can, get the cards sorted so all the manipulatives of one type are on the bottom and all of the other are on top.

- Time stops when you have the manipulatives sorted and back in a neat stack.

This time your entire group (three or four people) can sort the manipulatives.

Record the best time in your group.

Debriefing the Challenge

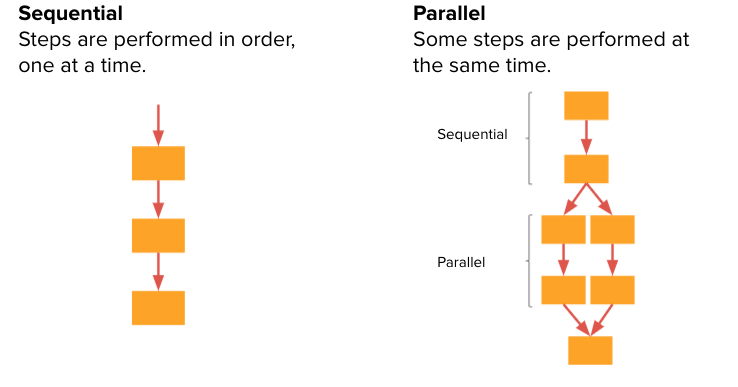

Display: Show the slides explaining the difference between parallel and sequential computing models. Talk through the different models.

Prompt: What portions of your algorithms for Challenges 2 and 3 were parallel? What makes things complicated or slows you down during parallel portions of your algorithm?

Discuss: Have groups discuss responses at their tables before sharing with the room.

Discussion Goal

This discussion has two goals. The first is to reinforce the difference between parallel and sequential portions of an algorithm. Any time multiple processes are happening at once (for example multiple people are sorting cards), an algorithm is parallel. The second goal is to bring up some common challenges that come up when running parallel algorithms. The remarks cover some of the most important ones but the main point is that while parallel algorithms are faster, they still present challenges.

Remarks

A lot of the challenges you just encountered show up when you try to run a program on multiple computers as well.

- Sometimes you need to wait because one computer finished before another

- It can be complicated to split up work and recombine it when moving in and out of parallel portions

- They're faster, but not always as much faster as you think.

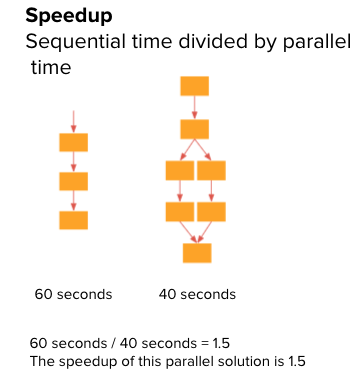

Prompt: What was your group's speedup in Challenge 2? What about in Challenge 3? Are you surprised?

Discuss: Have groups calculate their speedup and share with the room.

Display: Cover the primary points of speed in the real world.

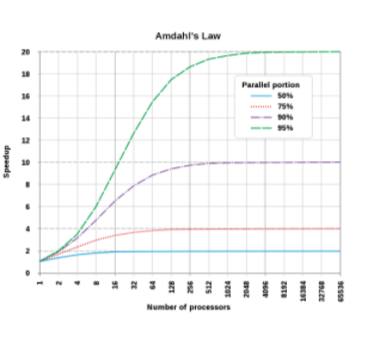

- Students probably noticed their speedup is lower than the number of people helping sort. Sorting with two people doesn't give a speedup of 2. Sorting with 3 people doesn't give a speedup of 3

- Because some portions are always still sequential, the benefits of adding more processors will go down and eventually the speedup reaches a limit.

Discussion Goal

Use this discussion to reinforce how speedup is calculated, but also to prime students to realize that adding additional parallel processes doesn't always lead to the same amount of speedup. During the parallel portion things are in fact moving faster, but sequential portions still take a long time (e.g. collecting individual piles once each group member has sorted their cards). Therefore speedup is rarely your original time divided by the number of computers running the process. Eventually it will level off.

Distributed Computing in Real World Settings

Remarks

We've just explored some of the core and theoretical ideas of parallel computing. It can speed things up, but not infinitely, and it adds complications and many more resources. That said, parallel computing can help tackle some big problems.

Prompt: Before showing the video share the two prompts.

- Why is the type of computing presented "distributed"?

- Why is distributed computing used to solve the problem?

Display: Show the video from the slides onthe international supercomputer project.

Discuss: Have students share their responses to the two prompts:

- Why is the type of computing presented "distributed"?

- Why is distributed computing used to solve the problem?

Distributed computing is very similar to parallel computing. The main idea is that programs can be run on lots and lots of computers. Distributed and parallel computing are helpful for solving really big problems that you couldn't normally solve on a single computer.

Wrap up (10 Minutes)

Remarks

Let's sum up what we learned: Parallel computing consists of both a parallel portion that is shared and a sequential portion.

A sequential solution's efficiency is measured as the sum of all of its steps, but a parallel solution takes as along as its sequential tasks plus the longest of its paralell tasks. Often times a parallel solution will be the fastest option, but there is a limit.

Solutions that use parallel computing can scale more effectively than solutions that use sequential computing. Why is this so? If we continue to add tasks, a sequential solution would continue to get larger and larger. However, with a parallel system, those tasks can be balanced.

Journal

Students add the following vocabulary words and definitions to their journals: sequential computing, parallel computing, distributed computing, speedup

- sequential computing: programs run in order, one command at a time.

- parallel computing: programs are broken into small pieces, some of which are run simultaneously

- distributed computing: programs are run by multiple devices

- speedup: the time used to complete a task sequentially divided by the time to complete a task in parallel

Prompt: Based on what we saw here today, create a list of pros and cons for distributed and parallel computing. Share it with a partner.

Discuss: Have students write their list, then share with a partner, and then finally discuss responses with the entire room.

Discussion Goal

Goal: This lesson covers a lot of ground so this is a good chance to review some of the main points of the lesson. Here's some big ones to cover.

- Parallel programs typically are faster

- Parallel programs don't get faster forever. At some point adding more processors doesn't really help

- Parallel programs can be more complicated.

- Parallel programs can be slowed down if only one of many devices is slow.

- Distributed programs can be run on thousands or even millions of computers which allows you to take on enormous problems

Assessment: Check for Understanding

For Students

Open a word doc or google doc and copy/paste the 2 following questions.

Question 1

What is the speedup of this parallel solution?

Question 2

In your own words, explain why the speedup of a parallel algorithm will eventually reach some limit.

Standards Alignment

- CSTA K-12 Computer Science Standards (2017): 3B-AP-11

- CSP2021: CSN-2.A.1, CSN-2.A.2, CSN-2.A.3, CSN-2.A.4, CSN-2.A.5, CSN-2.A.6, CSN-2.A.7

- CSP2021: CSN-2.B.1, CSN-2.B.2, CSN-2.B.3, CSN-2.B.4, CSN-2.B.5

End of Lesson

You have reached the end of the lesson for Algorithms. To view more tutorials, press the button below or you can go back to the previous lesson pages.